On the evening of November 8, 1895, an accidental discovery ushered in a scientific and medical revolution that would allow us to see inside the living human body for the first time.

While conducting an experiment with cathode rays, Wilhelm Roentgen, Ph.D., noticed a strange glow on a distant cardboard screen. Knowing that cathode rays could not pass through the obstacles between his cathode ray tube and the glow, he proposed the existence of a novel type of penetrating ray, which he called the “X-ray.” After spending several solitary weeks analyzing the rays, Roentgen published his findings in late December, along with an eerie X-ray photograph (radiograph) of his wife’s hand.

Roentgen’s discovery opened up the human body without a single incision. Soon, the medical profession was using X-rays to locate lodged bullets and bone fractures. With the further refinement of the technology and the development of contrast agents, even soft tissues came into focus.

Rock ‘n’ roll

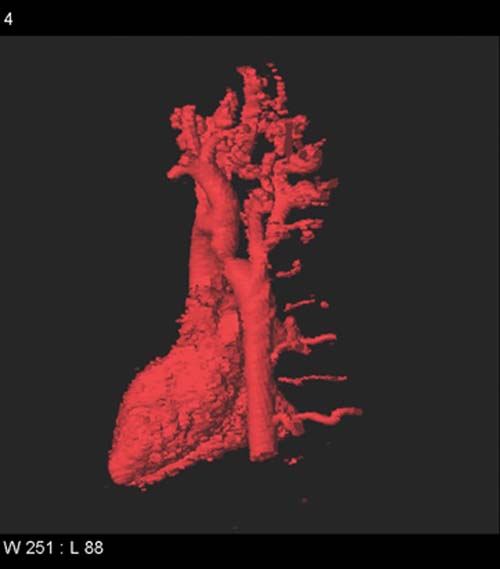

Despite the improved resolution of contrast-enhanced X-ray images, overlying bones obscured some parts of the body from view. By moving the X-ray tube and film in tandem, radiologists could blur out the bones that stood in the way and highlight a single cross-sectional slice through the body.

This new technique, called tomography, was first described by the Dutch radiologist Bernard Ziedses des Plantes in 1931. Tomography could produce a series of images that could be stacked to give the physician information about volume. The first commercial tomograph, called the laminagraph, was built at the Mallinckrodt Institute of Radiology at Washington University in St. Louis in 1937.
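The idea of stacking slices to estimate volume can be sketched in a few lines of Python. This is only an illustration, not a description of how early tomographs were read: the function name, the slice areas and the slice spacing are all hypothetical, and the estimate simply multiplies the summed cross-sectional areas by the distance between slices.

```python
def volume_from_slices(areas_cm2, spacing_cm):
    """Approximate a volume as (sum of slice areas) x (slice spacing)."""
    if spacing_cm <= 0:
        raise ValueError("spacing_cm must be positive")
    return sum(areas_cm2) * spacing_cm


# Hypothetical cross-sectional areas of one structure on five consecutive
# slices, in square centimeters (illustrative values, not patient data).
areas = [2.0, 3.5, 4.0, 3.5, 2.0]

# Assumed distance between neighboring slices, in centimeters.
volume = volume_from_slices(areas, spacing_cm=0.5)
print(f"{volume:.2f} cm^3")  # prints "7.50 cm^3"
```

The thinner and more closely spaced the slices, the better such a sum approximates the true volume.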

Although laminagraphs are related to modern computed tomography (CT), CT itself had to await the dawn of the computer age. A major player was a London-based electronics firm, Electric and Musical Industries Limited (EMI), perhaps best known as the Beatles’ record label.

Supported in part by the sales of Beatles records, the EMI group, led by Godfrey Newbold Hounsfield, developed the first CT scanner and brought it to market in 1971. As image resolution improved and scanning speed increased, the CT scan soon became the “standard of care” for suspected brain disorders. It has since become a powerful method for imaging the rest of the body as well.

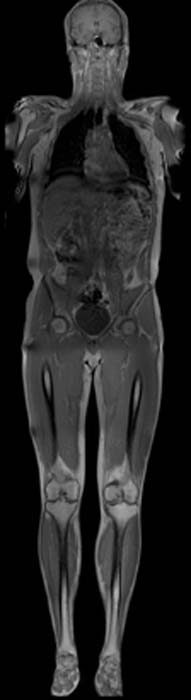

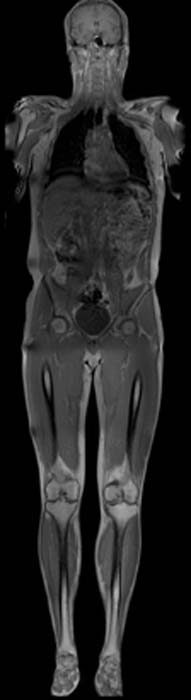

Over the seven decades that passed between the first X-ray devices and the modern version of CT, the basis for the next wave of medical imaging—one that didn’t involve harmful radiation—was slowly taking shape.

Resonance

In the late 1930s, physicists discovered that atomic nuclei containing odd numbers of protons (such as hydrogen) would align themselves with a strong magnetic field and revert to their original state, or “relax,” when the field was turned off. This change could be detected by the radiofrequency waves given off in the process.

Since bodily tissues differ in their water content (and consequently, hydrogen content), scientists realized that this “nuclear magnetic resonance,” or NMR, could be used to distinguish between soft tissues—and possibly to detect disease.

In 1971, Raymond Damadian, M.D., a physician at Downstate Medical School in Brooklyn, N.Y., used NMR to distinguish excised cancerous tissue from normal, healthy tissue.

Two years later, Paul Lauterbur, Ph.D., a chemist at the State University of New York at Stony Brook, introduced rotating magnetic field gradients and computer algorithms to assemble a two-dimensional image from NMR data. Using this technique, he produced the first NMR image of a living subject, a clam.

Damadian and colleagues followed in 1977 with the first NMR image of a human subject.

Peter Mansfield, Ph.D., a physicist at the University of Nottingham in England, developed mathematical calculations that allowed faster acquisition of the NMR image. His work led to “fast” magnetic resonance imaging (MRI), which could acquire images at the rate of 30 to 100 frames per second and later helped make functional MRI practical.

In 1989, Seiji Ogawa, Ph.D., a physicist at AT&T Bell Laboratories in New Jersey, described the phenomenon, called the Blood Oxygenation Level Dependent (BOLD) effect, that forms the basis for functional MRI (fMRI). Changes in the oxygenation of blood hemoglobin in “activated” brain regions perturb the local magnetic environment, so the blood itself serves as a natural contrast agent.

Since changes in the BOLD signal depend on the changes in blood flow and oxygenation, fMRI provided a measure of brain activity and the unparalleled ability to safely and non-invasively probe the physiological basis of neurological and psychological disorders as well as normal cognitive function.

Medical ‘Geiger counters’

Despite the attractiveness of MRI as a radiation-free, and presumably safe, imaging technique, nuclear technology has spawned some of the most sensitive and powerful imaging methods to date: single photon emission computed tomography (SPECT) and positron emission tomography (PET).

The discovery of naturally occurring radioactive elements, like uranium, polonium and radium, in the late 19th century sparked the “atomic age.” However, these naturally radioactive elements are not normally found in the body, so their medical use was limited.

In 1934, the creation of artificial radioisotopes of normally non-radioactive elements common in the body (including carbon, oxygen, nitrogen and fluorine) gave physicians the tools they needed to adapt radioactive compounds for medical purposes. As these radioisotopes decayed, they produced gamma rays, which could be detected with a Geiger counter.

Deriving an image was not the priority at first; the goal was to detect “hot spots” in the body where the radioactive compounds accumulated. But, in 1951, Benedict Cassen, Ph.D., at UCLA built the scintiscanner, a device that scanned the body using pen-sized gamma ray detectors and created a crude print-out of those hot spots. Acquiring a usable image from these radioactive compounds suddenly seemed possible.

In 1968, the first nuclear imaging machine, a single photon emission computed tomography (SPECT) scanner, was built. However, the seminal advance in nuclear imaging came in 1975, when Michael Phelps, Ph.D., and Edward Hoffman, Ph.D., at Washington University in St. Louis reported their development of PETT (positron emission transaxial tomography), later shortened to “PET.”

When a positron emitted by a decaying radioisotope collides with a nearby electron, two gamma rays traveling in opposite directions are produced. Using a hexagonal array of gamma detectors and computational methods similar to those that generated CT images, the Washington University scientists built a device that could construct three-dimensional “maps” of positron emission deep within the body.
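Because the two gamma rays leave in opposite directions at the same instant, a pair of detectors that fire almost simultaneously marks a straight line along which the decay must have occurred. The Python sketch below illustrates that pairing step only. It is not the Washington University group's method: the function name, the detector numbering, the 10-nanosecond timing window and the sample hits are all assumptions made for the example.

```python
def find_coincidences(events, window_ns=10.0):
    """Pair detector hits whose timestamps lie within window_ns of each other.

    events: list of (time_ns, detector_id) tuples.
    Returns a list of (detector_a, detector_b) pairs, one per coincidence.
    """
    ordered = sorted(events)  # sort hits by arrival time
    pairs = []
    i = 0
    while i < len(ordered) - 1:
        t1, d1 = ordered[i]
        t2, d2 = ordered[i + 1]
        if t2 - t1 <= window_ns and d1 != d2:
            pairs.append((d1, d2))
            i += 2  # both hits belong to this coincidence
        else:
            i += 1  # unpaired hit; move on to the next one
    return pairs


# Hypothetical hits: (arrival time in nanoseconds, detector number).
hits = [(100.0, 3), (104.0, 15), (250.0, 7), (900.0, 1), (903.0, 13)]
pairs = find_coincidences(hits)
print(pairs)  # [(3, 15), (1, 13)]; the lone hit on detector 7 is discarded
```

Each pair defines one line through the body. A reconstruction step, not shown here, would combine many such lines into a map of where the radioisotope has accumulated.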

PET was primarily used for research purposes until 1979, when another milestone—the development of radioactive FDG (fluorodeoxyglucose), a glucose analog—further propelled the technology into the medical field. With FDG, physicians can track the metabolic activity of cells, aiding in the diagnosis of cancer and other diseases.

Smiling in the womb

In 1880, the discovery of piezoelectricity laid the foundation for one of the safest and most economical methods of seeing into the body: ultrasound.

Piezoelectric crystals generate a voltage in response to applied mechanical stress, including sound waves. During World War I, they were used in the first sonar devices to detect sound waves bounced off enemy submarines.
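The arithmetic behind echo ranging is simple: a pulse travels out to the target and back, so the distance is the speed of sound multiplied by half the round-trip time. The short Python sketch below works through that calculation. The function name and the timing values are hypothetical, and the speeds of sound (about 1,500 meters per second in seawater and about 1,540 in soft tissue) are round approximations.

```python
def echo_distance_m(round_trip_s, speed_m_per_s):
    """Distance to a reflector, given the echo's round-trip time."""
    if round_trip_s < 0 or speed_m_per_s <= 0:
        raise ValueError("time must be non-negative and speed positive")
    # The pulse covers the distance twice (out and back), hence the / 2.
    return speed_m_per_s * round_trip_s / 2.0


SPEED_SEAWATER = 1500.0  # m/s, approximate
SPEED_TISSUE = 1540.0    # m/s, approximate average for soft tissue

# Hypothetical sonar echo returning after 2 seconds.
submarine_m = echo_distance_m(2.0, SPEED_SEAWATER)

# Hypothetical ultrasound echo returning after 100 microseconds.
tissue_m = echo_distance_m(100e-6, SPEED_TISSUE)

print(f"{submarine_m:.0f} m, {tissue_m * 100:.1f} cm")
```

The same relationship applies whether the reflector is a submarine hull or a boundary between tissues; only the speed of sound and the time scale change.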

In 1937, sound waves were first transmitted through a patient’s head to derive a crude image of the brain. Ultrasound ultimately found its niche in obstetrics and gynecology in the 1950s following reports of the damaging effects of X-rays on the fetus.

Since then, ultrasound has morphed from flat black-and-white, two-dimensional images intelligible only to trained professionals to the sharp and startling 4-D “movies” of the fetus kicking, yawning and smiling in the womb.

Because ultrasound also can capture the movements of heart muscle and valves, an application called echocardiography is now used to examine the heart—before and after birth.